Static Analysis Methods for Neural Networks

Abstract

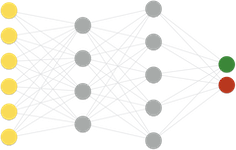

Formal methods provide rigorous guarantees of correctness for both hardware and software systems. Their use is well established in industry, notably to certify safety of critical applications subject to stringent certification processes. With the rising prominence of machine learning, the integration of machine-learned components into critical systems presents novel challenges for the soundness, precision, and scalability of formal methods. This talk serves as an introduction to formal methods tailored for machine learning software, with a focus on static analysis methods for neural networks. We will present several verification approaches, highlighting their strengths and limitations, through the lens of different (hyper)safety properties. A neural network surrogate from a real-world avionics use case will serve as a running example. We will additionally survey the application of these verification approaches towards the additional goal of enhancing machine learning explainability. We will conclude with perspectives on possible future research directions in this rapidly evolving field.