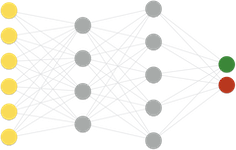

Abstract Domains for Machine Learning Verification

Abstract

Machine learning (ML) software is increasingly deployed in high-stakes and sensitive applications, raising important challenges related to safety, privacy, and fairness. In response, ML verification has quickly gained traction within the formal methods community, particularly through techniques such as abstract interpretation. However, much of this research has progressed with minimal dialogue and collaboration with the ML community, where it often goes underappreciated. In this talk, we advocate for closing this gap by exploring possible ways to make formal methods more appealing to the ML community. We will survey our recent and ongoing work on the design and development of abstract domains for machine learning verification, and discuss research questions and avenues for future work in this context.