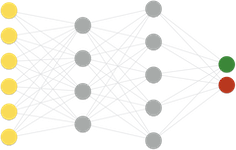

Verifying Attention Robustness of Deep Neural Networks against Semantic Perturbations

Abstract

In this paper, we propose the first verification method for attention robustness, i.e., the local robustness of changes in the saliency map against combinations of semantic perturbations. Specifically, our method determines the range of perturbation parameters (e.g., the amount of brightness change) that keeps the difference between the actual saliency-map change and the expected saliency-map change below a given threshold. Our method is based on traversing linear activation regions and focuses on the outermost boundary of attention robustness in order to scale to larger deep neural networks.
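The property described in the abstract can be illustrated with a small sketch. This is not the paper's verification algorithm: it is an empirical grid sweep, not a sound traversal of linear activation regions. It assumes a hypothetical one-hidden-layer ReLU network, a vanilla-gradient saliency map, a brightness perturbation that adds a scalar `beta` to every input value, and an expected saliency change of zero under brightness shifts. All names (`saliency`, `robust_brightness_range`, the weights, the threshold) are illustrative assumptions.

```python
import numpy as np

def saliency(W1, b1, w2, x):
    """Vanilla-gradient saliency of f(x) = w2 . relu(W1 x + b1) (assumed toy model)."""
    active = (W1 @ x + b1) > 0      # ReLU activation pattern at x
    return (w2 * active) @ W1       # gradient of f with respect to x

def robust_brightness_range(W1, b1, w2, x, threshold, betas):
    """Contiguous interval of sampled brightness shifts around 0 for which the
    saliency map deviates from the unperturbed one by at most `threshold`.
    Assumes the expected saliency change under brightness is zero."""
    base = saliency(W1, b1, w2, x)
    ok = [np.max(np.abs(saliency(W1, b1, w2, x + beta) - base)) <= threshold
          for beta in betas]
    start = int(np.argmin(np.abs(betas)))   # grid point closest to "no perturbation"
    if not ok[start]:
        return None
    lo = hi = start
    while lo > 0 and ok[lo - 1]:            # extend the interval to darker shifts
        lo -= 1
    while hi < len(betas) - 1 and ok[hi + 1]:  # extend to brighter shifts
        hi += 1
    return float(betas[lo]), float(betas[hi])

# Hypothetical two-pixel input and hand-picked weights.
W1 = np.array([[1.0, 0.0], [0.0, 1.0]])
b1 = np.zeros(2)
w2 = np.array([1.0, 1.0])
x = np.array([0.55, -0.25])

lo, hi = robust_brightness_range(W1, b1, w2, x, threshold=0.5,
                                 betas=np.linspace(-1.0, 1.0, 21))
print(lo, hi)
```

Because the gradient of a ReLU network is constant inside each linear activation region, the saliency map in this toy model changes only when a brightness shift flips a neuron's activation. Here that happens near `beta = -0.55` and `beta = 0.25`, so the sweep reports an interval of roughly `[-0.5, 0.2]` on the sampled grid. A sampling sweep like this can miss violations between grid points, which is the gap a verification method is meant to close.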

Type

Publication

Date

2022